Mode Connectivity and Batchnorm

Mode Connectivity

Mode Connectivity describes a peculiar phenomenon in Deep Learning. When training networks we are looking for (local) minima of the loss function. Finding these is a hard problem, but - surprisingly - Neural networks tend to converge pretty well.

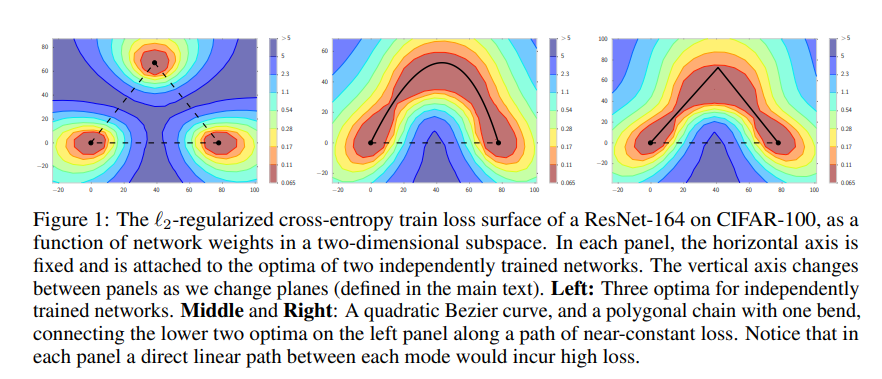

Not only that, but different local minima seem to be connected. I.e. it seems to be the case that we can often find a path between the weights of two equally well performing networks, where all weights on the path are also a well performing network. In other words, points of low loss seem to be connected.

These paths are not necessarily linear, as a paper shows.

But why would that be the case? Shouldn’t a good performing network be rare?

It depends.

In fact, we will argue, that Mode Connectivity is very unsurprising in Neural Networks that use ReLU and Batchnorm or are overparametrized.

Batchnorm

A Batchnorm layer recenters the data by substracting the mean and dividing by the variance. The Idea of Batchnorm was to make Neural networks more stable in training. The layer has become widely used and much research is still being done analyzing how and why it often works so well.

But we are interested in something else: Since Batchnorm normalizes the output of a layer, we loose some expressivity. In fact we become invariant to exactly two values: The bias and the scale of the weights. In other words, if we use a Batchnorm layer, we can scale the weights of the previous layer arbitrarily without changing the resulting function. 1

Now imagine we have two Neural networks with weights $\theta_1$ and $\theta_2$ that use Batchnorm for every layer. In theory we can scale down all parameters of $\theta_1$ except for the Batchnorm parameters, and do the same with $\theta_2$. In doing so the networks move closer to each other, as most parameters become more similar with the exception of the few parameters saved in the Batchnorm layer.

This alone might create a path as shown above. In fact, depending on final non-linearity, even the scaling in the batchnorm layers can be safely ignored.

Overparametrization

This is an argument I read in a paper I cannot find (TODO find this). The argument went roughly as follows:

If a fully connected layer has enough parameters, we can find some parameters the are unneccessary. Using these parameters we can smoothly transform the weights of such a layer to any permutation of the weights without ever changing the output of the whole network.

This means that all networks that encode the same function are (often) connected to each other. In this case Mode Connectivity might be trivial. Simply move to the permutation of weights that is closest and then scale the weights until we have a reasonably similar function. The rest of the difference is then easily bridgeable.

Notes

If Mode Connectivity can be completely explained by permutation invariance and Batchnorm we have to conclude that Neural networks mostly converge to the same solution - albeit encoded differently in the weights. This would have very interesting implications for research and training of Neural Networks.

-

We have to be careful here, as Batchnorm does not calculate this scaling dynamically, but instead learns the parameters. ↩