Will we see user-customized LLMs?

Spoiler: Yes

LLMs have amazing capabilities: they can solve many tasks, write text for you and build apps. Currently, however, they are not very customizable.

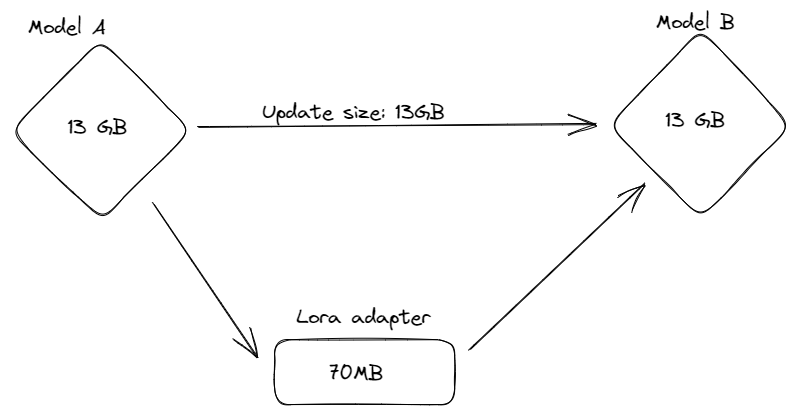

“But that is completely reasonable,” you might say. “Even the smallest models are already very big; it makes no sense to create a 13GB model for every user.”

You don’t need 13GB. Around 70MB per user is basically enough.

Stanford released the Alpaca model, a fine-tuned version of LLaMA 7B that behaves similarly to OpenAI’s text-davinci-003 (a GPT-3.5 model). They did this by fine-tuning LLaMA on instruction-following examples generated by text-davinci-003.

You can download the model; it’s 13GB.

OR, if you already have the LLaMA model, you could download a 13GB update.

OR, if you already have the LLaMA model, you could download the Alpaca-LoRA adapter, which is about 70MB.
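Where does a number of that order come from? A LoRA adapter stores, for each weight matrix it adapts, two thin factors with r·(d_out + d_in) parameters instead of the d_out·d_in of the full matrix. The back-of-the-envelope sketch below assumes LLaMA-7B's shape (32 layers, hidden size 4096, about 6.7 billion parameters), rank r = 16 on the four attention projections of every layer, and 32-bit adapter weights. These settings are illustrative assumptions, not necessarily the exact configuration Alpaca-LoRA shipped with.

```python
# Back-of-the-envelope size of a LoRA adapter vs. a full model copy.
# Assumptions (not from the original text): LLaMA-7B has 32 layers with
# hidden size 4096; the adapter uses rank 16 on the four attention
# projections (q, k, v, o) of every layer and stores fp32 weights.

HIDDEN = 4096         # hidden size of LLaMA-7B
LAYERS = 32           # number of transformer layers in LLaMA-7B
FULL_PARAMS = 6.7e9   # approximate parameter count of LLaMA-7B


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters of one low-rank update B @ A, with B: d_out x r and A: r x d_in."""
    return rank * (d_out + d_in)


def adapter_params(rank: int = 16, matrices_per_layer: int = 4) -> int:
    """Total adapter parameters when every adapted matrix is HIDDEN x HIDDEN."""
    per_matrix = lora_params(HIDDEN, HIDDEN, rank)
    return per_matrix * matrices_per_layer * LAYERS


n = adapter_params()
adapter_mb = n * 4 / 1e6          # 4 bytes per fp32 parameter
full_gb = FULL_PARAMS * 2 / 1e9   # 2 bytes per fp16 parameter

print(f"adapter parameters: {n:,}")              # 16,777,216
print(f"adapter size: {adapter_mb:.1f} MB")      # 67.1 MB
print(f"full model size: {full_gb:.1f} GB")      # 13.4 GB
```

Under these assumptions the adapter holds about 16.8 million numbers, roughly 0.25% of the base model, which is why it fits in tens of megabytes while the full model needs about 13GB.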

“How to save 13GB of space with this one simple trick”

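LoRA stands for “Low-Rank Adaptation”. Instead of changing the full weight matrices, it freezes the base model and learns a low-rank, factorized update for selected weight matrices: the product of two thin matrices. This shrinks the fine-tuned part of the model massively. That alone is amazing, but think about what it means in the long term.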
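The core idea fits in a few lines. Following the formulation in the LoRA paper, a frozen weight W is augmented with the product of two thin matrices, h = Wx + (α/r)·B(Ax), where A is r × d_in, B is d_out × r and α is a scaling hyperparameter. The sketch below is a toy, pure-Python illustration with hand-picked numbers; the function names are hypothetical and it is not how any particular library implements LoRA.

```python
# Toy LoRA forward pass in pure Python (no ML framework), for illustration only.
# The frozen weight W (d_out x d_in) is never modified; only the thin factors
# A (r x d_in) and B (d_out x r) would be trained.

Vector = list[float]
Matrix = list[list[float]]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Matrix-vector product M @ x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def matmul(P: Matrix, Q: Matrix) -> Matrix:
    """Matrix-matrix product P @ Q."""
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """h = W x + (alpha / r) * B (A x), without forming the d_out x d_in matrix B @ A."""
    scale = alpha / len(A)            # r = number of rows of A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))  # project down to r dims, then back up
    return [b + scale * u for b, u in zip(base, update)]


def merge(W: Matrix, A: Matrix, B: Matrix, alpha: float) -> Matrix:
    """Fold the update into a single weight W' = W + (alpha / r) * B @ A."""
    scale = alpha / len(A)
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


# Rank-1 example on a 3x3 identity "base model".
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
A = [[1.0, 2.0, 3.0]]        # r x d_in  = 1 x 3
B = [[0.5], [0.0], [-1.0]]   # d_out x r = 3 x 1
x = [1.0, 1.0, 1.0]

print(lora_forward(W, A, B, x, alpha=2.0))   # [7.0, 1.0, -11.0]
print(matvec(merge(W, A, B, alpha=2.0), x))  # [7.0, 1.0, -11.0]
```

Both paths give the same result: the adapter can be merged into W after training so inference costs nothing extra, or kept separate so that one base model can host many small adapters. In the LoRA paper B starts at zero, so training begins exactly at the base model's behavior.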

We can already run LLaMA locally with llama.cpp. In theory, a large player such as Google, Meta or Apple could create one base model and learn a fine-tuning for every single user that takes only around 70MB per user. This could be a personal assistant perfectly suited to your needs (in a good world) or a very good simulation of your behavior and interests (in a bad world).

Either way, creating and saving these models is not only technically possible, but easy.

You could have a model that always talks like a sailor, a psychotherapy model or a teacher model, and none of them would need an additional prompt; each would instead use its own 70MB LoRA adapter.
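To make “one base model, many adapters” concrete, here is a hypothetical toy server. The class names `AdapterServer` and `LoraAdapter` and the persona names are made up for illustration, and a single matrix stands in for a whole LLM. The base weight is stored once, and each persona only adds its own pair of thin matrices, chosen per request.

```python
# Hypothetical sketch: one frozen base weight shared by every persona/user,
# plus a small per-persona LoRA adapter (A, B, alpha) selected at request time.
from dataclasses import dataclass, field
from typing import Dict, List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Matrix-vector product M @ x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


@dataclass
class LoraAdapter:
    A: Matrix      # r x d_in
    B: Matrix      # d_out x r
    alpha: float   # scaling hyperparameter

    def num_params(self) -> int:
        return sum(len(row) for row in self.A) + sum(len(row) for row in self.B)


@dataclass
class AdapterServer:
    W: Matrix  # base weight, loaded once and shared by all adapters
    adapters: Dict[str, LoraAdapter] = field(default_factory=dict)

    def register(self, name: str, adapter: LoraAdapter) -> None:
        self.adapters[name] = adapter

    def forward(self, x: Vector, adapter_name: Optional[str] = None) -> Vector:
        out = matvec(self.W, x)
        if adapter_name is None:
            return out  # plain base model
        ad = self.adapters[adapter_name]
        scale = ad.alpha / len(ad.A)
        update = matvec(ad.B, matvec(ad.A, x))
        return [o + scale * u for o, u in zip(out, update)]

    def stored_params(self) -> int:
        base = sum(len(row) for row in self.W)
        return base + sum(a.num_params() for a in self.adapters.values())


# Toy demo: a 4x4 identity matrix stands in for the shared base model.
d = 4
base = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
server = AdapterServer(base)
server.register("sailor", LoraAdapter(A=[[1.0, 0.0, 0.0, 0.0]], B=[[0.0], [1.0], [0.0], [0.0]], alpha=1.0))
server.register("teacher", LoraAdapter(A=[[0.0, 0.0, 0.0, 1.0]], B=[[0.0], [0.0], [2.0], [0.0]], alpha=1.0))

x = [1.0, 2.0, 3.0, 4.0]
print(server.forward(x))             # [1.0, 2.0, 3.0, 4.0]  (plain base model)
print(server.forward(x, "sailor"))   # [1.0, 3.0, 3.0, 4.0]
print(server.forward(x, "teacher"))  # [1.0, 2.0, 11.0, 4.0]
print(server.stored_params())        # 32 = 16 (base) + 8 + 8 (two rank-1 adapters)
```

At this toy size the adapters are not much smaller than the base, but in a real model the matrices are thousands of rows wide while r stays small, so each additional persona or user costs only a tiny fraction of a full copy.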

We already see this with Stable Diffusion models, for which there is a plethora of LoRA adapters. Because Stable Diffusion is small enough to be fine-tuned on consumer hardware, there has been an explosion of alternative checkpoints, fine-tunes and LoRA models. You can find many of them on websites such as Civitai.

For example, here is a LoRA model that does not produce arbitrary images but instead focuses on creating anime-style tarot cards.